Bayesian Deep Learning for Distribution Prediction of TLS Uncertainties

© GIH


| Led by: | Jan Hartmann, Hamza Alkhatib |
| --- | --- |
| E-Mail: | jan.hartmann@gih.uni-hannover.de |
| Year: | 2024 |
| Date: | 01-12-23 |

Background:

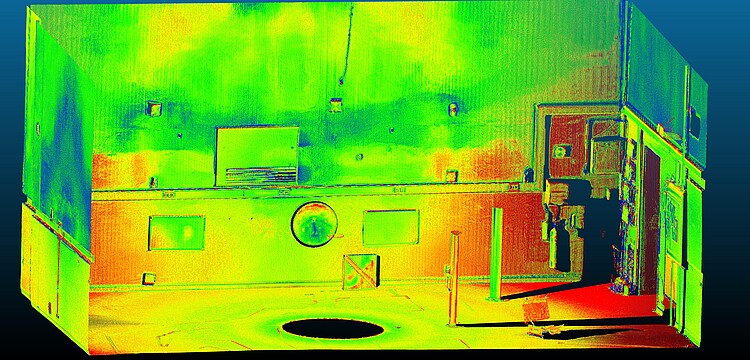

In modern geodetic applications, Terrestrial Laser Scanning (TLS) is a key technology for acquiring detailed 3D information. While TLS has demonstrated remarkable capabilities, the need for robust uncertainty modeling is becoming increasingly apparent in critical applications such as deformation analysis. An accurate understanding and quantification of systematic deviations in TLS measurements is essential to ensure the reliability of 3D data. This research addresses this need by investigating the uncertainties in TLS data, in particular the systematic deviations in distance measurements.

Objective:

The primary aim of this work is to improve the quality of uncertainty modeling in TLS. Initial attempts using Gradient Boosting Trees and Deep Learning models have shown promising results in modeling systematic effects. However, these models represent systematic deviations only as point estimates, which leaves a gap with respect to comprehensive uncertainty modeling. This study aims to close this gap by leveraging Bayesian Deep Learning approaches. Specifically, we seek not only to predict the distribution of systematic deviations in TLS but also to account for the inherent variability of both systematic and random deviations. By incorporating Bayesian principles into deep learning models, we aim to provide a more nuanced understanding of TLS uncertainty, capturing both the mean and the standard deviation of systematic deviations while accommodating the stochastic nature of random deviations.
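One common way to obtain a predictive distribution from a Bayesian deep learning model is to run several stochastic forward passes, for example with Monte Carlo dropout or a deep ensemble. Each pass outputs a mean and a standard deviation for a point's distance deviation. The passes are then merged using the law of total variance. The minimal sketch below assumes exactly this setup. The function names `combine_passes` and `predictive_std` are hypothetical and are not part of the provided material. How the two variance components relate to systematic and random TLS deviations is an assumption of the sketch, not a result of this project.

```python
import math
from typing import Sequence, Tuple


def combine_passes(means: Sequence[float], stds: Sequence[float]) -> Tuple[float, float, float]:
    """Merge T stochastic forward passes for one point (hypothetical helper).

    Pass t predicts a Gaussian N(mu_t, sigma_t^2). By the law of total
    variance, the equally weighted mixture of the T Gaussians has
      mean      = (1/T) * sum(mu_t)
      noise_var = (1/T) * sum(sigma_t^2)         # average predicted variance (aleatoric)
      model_var = (1/T) * sum((mu_t - mean)^2)   # spread of the predicted means (epistemic)
    Returns (mean, noise_var, model_var).
    """
    if not means or len(means) != len(stds):
        raise ValueError("means and stds must be non-empty and of equal length")
    if any(s <= 0 for s in stds):
        raise ValueError("standard deviations must be positive")
    t = len(means)
    mean = sum(means) / t
    noise_var = sum(s * s for s in stds) / t
    model_var = sum((m - mean) ** 2 for m in means) / t
    return mean, noise_var, model_var


def predictive_std(noise_var: float, model_var: float) -> float:
    """Total predictive standard deviation of the mixture."""
    return math.sqrt(noise_var + model_var)
```

Consider three passes with means 1.0, 2.0 and 3.0 and standard deviations of 1.0 each, in hypothetical units of mm. `combine_passes` returns a mean of 2.0, a noise variance of 1.0 and a model variance of 2/3. This gives a total predictive standard deviation of sqrt(5/3), roughly 1.29. Keeping the two components separate shows whether uncertainty comes from the data or from the model itself.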

Specific Methodology:

Using deep learning models (e.g. PointNet and PointNet++), the investigation aims to predict distribution functions for TLS uncertainties. This involves not only exploring the capabilities of the deep learning models but also critically examining how the loss function should be defined. A well-chosen loss function is essential for effectively predicting the distribution of the uncertainties. The study will therefore systematically evaluate different loss functions to identify the most suitable one for this problem.
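Comparing loss functions becomes concrete once the network outputs a location and a scale per point instead of a single value. The sketch below shows three standard candidates that such an evaluation might include: the Gaussian negative log-likelihood (NLL), the Laplace NLL, and the closed-form continuous ranked probability score (CRPS) of a Gaussian. The selection of these three losses and all function names are assumptions for illustration. The document does not specify which losses the study will compare.

```python
import math
from typing import Callable, Sequence


def gaussian_nll(y: float, mu: float, sigma: float) -> float:
    """NLL of observation y under N(mu, sigma^2), including the constant term."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (y - mu) / sigma
    return 0.5 * z * z + math.log(sigma) + 0.5 * math.log(2.0 * math.pi)


def laplace_nll(y: float, mu: float, b: float) -> float:
    """NLL of y under a Laplace(mu, b) distribution; less sensitive to outliers."""
    if b <= 0:
        raise ValueError("b must be positive")
    return abs(y - mu) / b + math.log(2.0 * b)


def gaussian_crps(y: float, mu: float, sigma: float) -> float:
    """Closed-form CRPS of N(mu, sigma^2) for observation y (lower is better)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (y - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)   # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))          # standard normal CDF
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))


def mean_loss(loss_fn: Callable[[float, float, float], float],
              ys: Sequence[float], mus: Sequence[float], scales: Sequence[float]) -> float:
    """Average a per-point loss over a batch of points."""
    if not ys or not (len(ys) == len(mus) == len(scales)):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(loss_fn(y, m, s) for y, m, s in zip(ys, mus, scales)) / len(ys)
```

The loss functions differ in how they treat overconfidence. Both NLLs punish a small predicted scale on a wrong prediction very strongly. The CRPS grows only linearly with the error and tends towards the absolute error as the scale shrinks. In practice the network would output the scale through a positivity-enforcing transform (e.g. softplus or exp of a log-scale). In PyTorch, `torch.nn.GaussianNLLLoss` implements the Gaussian case and expects the variance rather than the standard deviation.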

Provided Material:

- Processed dataset of TLS point clouds, including precomputed differences to the reference point cloud.

- For validation: models pre-trained on this dataset, including XGBoost and PointNet models.

Programming Requirement:

Proficiency in Python and its deep learning frameworks is essential.